Hybrid work has swiftly ushered in several changes to the modern workplace over the past two years. To accommodate everything from office-optional working to serial laptop lifestyles, enterprises have needed to significantly expand the employee support delivered from their data centers and cloud deployments.

The installation of additional collaboration tools, as well as employee enablement software and programs, has put a strain on the traditional enterprise data center. Many businesses are responding to the increase in workloads by exploring data center expansion, acceleration, and optimization projects.

The reality is that we are living in a very distributed world, and it’s time for enterprise data centers to catch up. Organizations around the world will see workloads become even more distributed, because applications need to run near the data and near the users who consume it. This will inevitably create a large and varied infrastructure topology. It is crucial that enterprises have the right infrastructure in place to support such varied applications.

In this day and age, computing needs to be done everywhere: at the data center, at the edge, and perhaps even within employees’ homes. Accelerated data centers of the future will seamlessly enable decentralization and rapid edge computing, all while delivering efficiencies that keep them aligned with corporate sustainability goals and initiatives.

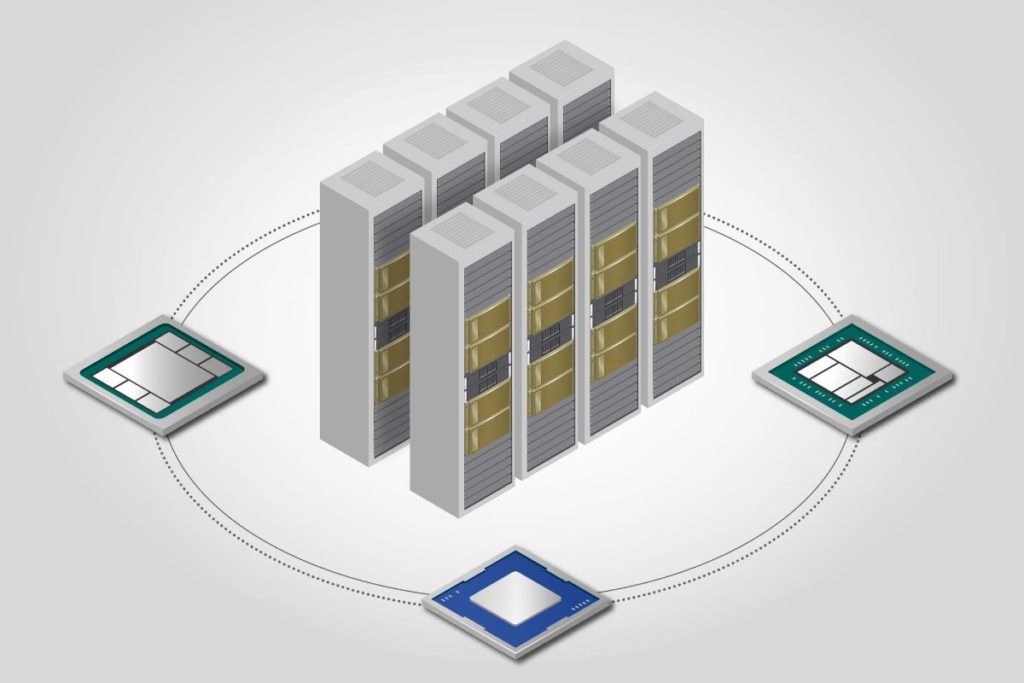

Hardware solutions like DPUs, GPUs and CPUs can help enterprises solve security concerns that arise with distributed workforces, while still delivering on performance, manageability and maintenance for enterprise IT teams. Accelerated applications will be offloaded from CPUs to GPUs, and SmartNICs based on programmable DPUs will accelerate data center infrastructure services such as networking, storage and security, delivering unprecedented performance.

CPUs are Powerful, But Not Powerful Enough for Hybrid Work

CPUs are essential. Fast and versatile, they race through a series of tasks one after another, which suits work that requires a lot of interactivity, such as calling up information from a hard drive in response to a user’s keystrokes. But the data size and compute size of today’s new applications, as well as the problems they’re trying to solve, are too large for CPU-only infrastructure. When accelerated computing software and systems are integrated, the same infrastructure can do much more work.

Accelerated computing is the use of specialized hardware to dramatically speed up work, often with parallel processing that bundles frequently occurring tasks. It offloads demanding work that can bog down CPUs, processors that typically execute tasks in serial fashion.
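As a rough illustration of why offloading lets the same infrastructure do more work, the sketch below applies Amdahl's law, a standard performance model that the discussion above does not itself cite. The function name, the 90% offloadable fraction, and the 20x accelerator speedup are hypothetical values chosen only for illustration; they are not measurements of any NVIDIA product.

```python
def overall_speedup(offloaded_fraction: float, accelerator_speedup: float) -> float:
    """Amdahl's law: overall speedup when only part of a job is accelerated."""
    if not 0.0 <= offloaded_fraction <= 1.0:
        raise ValueError("offloaded_fraction must be between 0 and 1")
    if accelerator_speedup <= 0:
        raise ValueError("accelerator_speedup must be positive")
    # Work that stays on the CPU runs at its original speed.
    serial_part = 1.0 - offloaded_fraction
    # Offloaded work shrinks by the accelerator's speedup factor.
    accelerated_part = offloaded_fraction / accelerator_speedup
    return 1.0 / (serial_part + accelerated_part)


# Hypothetical workload: 90% of the work can be offloaded,
# and the accelerator runs that portion 20x faster (assumed numbers).
print(f"{overall_speedup(0.9, 20.0):.2f}x")  # prints "6.90x"
```

Under these assumed numbers, the work left on the CPU caps the gain: even an infinitely fast accelerator could not push this hypothetical workload past 10x, so the share of work that can be offloaded matters as much as raw accelerator speed.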

NVIDIA’s accelerated computing platform delivers this next generation of performance through software-defined, hardware-accelerated solutions for the age of AI.

DPU: Powering Unprecedented Data Center Transformation

The data processing unit (DPU) accelerates data center infrastructure processing. The NVIDIA BlueField DPU is a data center infrastructure-on-a-chip that combines a high-speed networking interface with powerful, software-programmable Arm® cores, enabling breakthrough networking, storage and security performance.

Many of the world’s top server manufacturers offer or are building systems powered by BlueField DPUs. BlueField offloads, accelerates and isolates a broad range of software-defined infrastructure services that previously ran on the host CPU, overcoming performance and scalability bottlenecks and eliminating security threats in modern data centers.

BlueField DPUs transform traditional computing environments into secure and accelerated data centers, allowing organizations to efficiently run data-driven, cloud-native applications alongside legacy applications. By decoupling the data center infrastructure from business applications, BlueField DPUs enhance data center security, streamline operations, and reduce total cost of ownership.

Accelerated Computing Platform: Optimized for Next Generation Workloads

The diversity of compute-intensive applications running in modern cloud data centers has driven the explosion of advanced technologies. Such intensive applications include AI deep learning (DL) training and inference, data analytics, scientific computing, genomics, edge video analytics and 5G services, graphics rendering, cloud gaming and many more.

Whether it’s scaling up AI training and scientific computing, scaling out inference applications, or enabling real-time conversational AI, the NVIDIA Ampere architecture provides the necessary horsepower to accelerate numerous complex and unpredictable workloads running in today’s cloud data centers.

The NVIDIA accelerated computing platform provides a way for customers to run diverse traditional and modern applications on a single high-performance, cost-effective, and scalable infrastructure. It brings together compute acceleration and high-speed secure networking in enterprise data center servers, built and sold by NVIDIA partners. This platform is supported by a vast suite of software that enables users to become productive immediately and can be easily integrated into existing industry-standard IT and DevOps frameworks, allowing IT to manage, deploy, operate, and monitor their infrastructure.

With accelerated data centers, enterprises can provide hybrid workers with the power, performance and security that enable them to boost productivity and tackle complex workflows, no matter where they work from.

This end-to-end NVIDIA accelerated computing platform, integrated across hardware, networking and software, gives enterprises the blueprint for a robust, secure infrastructure. This technology will support develop-to-deploy implementations across all modern workloads and reduce the energy consumption of data centers at an architectural level.

To learn more, watch the recent fireside chat with Kit Colbert, VMware CTO, and Michael Kagan, NVIDIA CTO, and see how you can expand, accelerate, and optimize your data center for hybrid work.