The good: A legitimate AGI contender

Now, before we get to the drama over the “new and improved” Grok (of which, there is MUCH), let’s cover the basics of Grok 4, the “new” model from Elon Musk’s AI company xAI.

The launch was peak Elon chaos: Grok 4 was released late last night in a live premiere, an hour late, with him in a leather jacket broadcasting from xAI headquarters alongside half his engineering team.

During the livestream, Musk boldly claimed Grok 4 is “smarter than almost all graduate students, in all disciplines.”

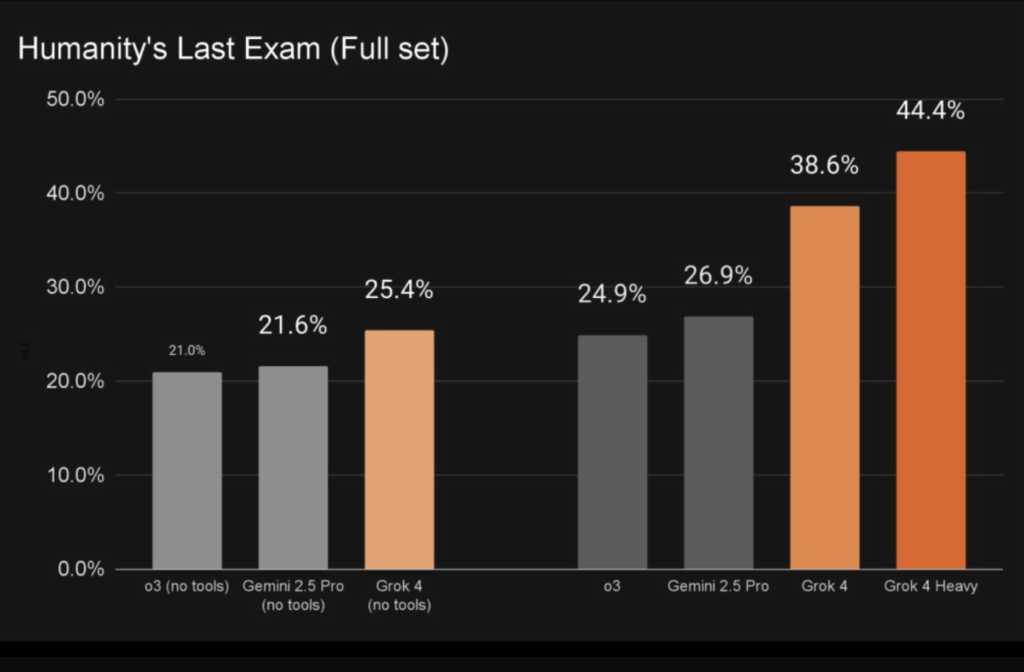

And the numbers actually back that up: It’s legitimately impressive. On Humanity’s Last Exam (the hardest reasoning test for AI), it scored 26.9% without any tools — that’s massive when you consider most humans only get around 5%. With tools? It jumps to 41%. But here’s where it gets wild: When they unleashed 32 Grok agents working together, it hit 50%.

The meme in AI land is “we’re so cooked,” and we’re hesitant to use that phrase ourselves, buuuuut…. we’re starting to feel a bit like that famous frog in that famous pot who JUST noticed he’s begun to sweat.

For context, these benchmarks show Grok 4 absolutely obliterating the competition:

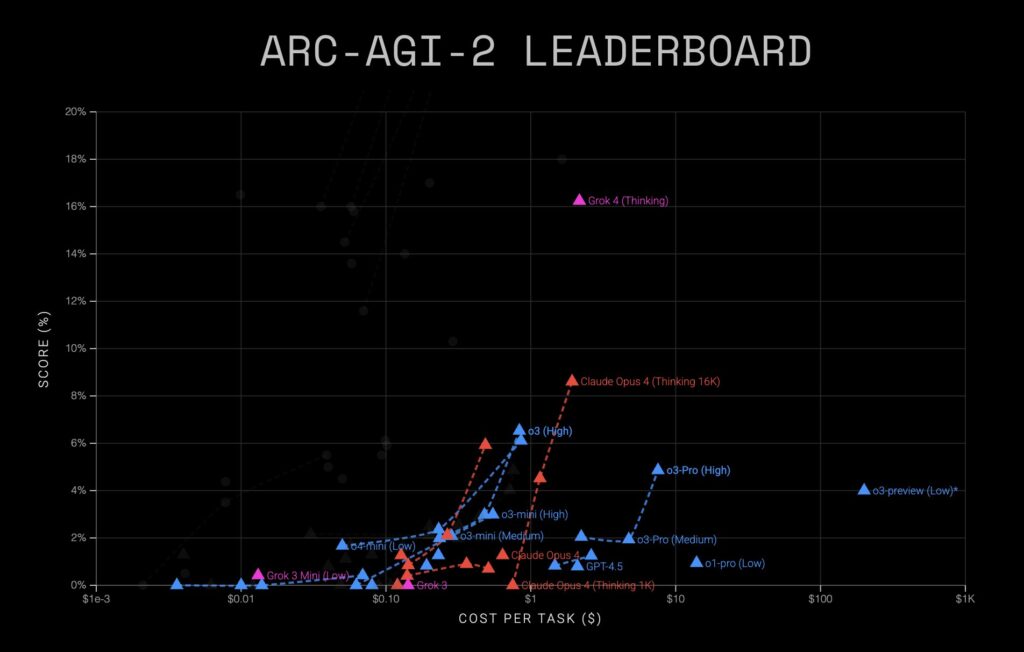

- Doubled Claude Opus 4’s score on ARC-AGI-2 (another benchmark for assessing actual “reasoning”).

- Got a perfect 100% on AIME (math olympiad problems).

- Crushed GPT-4o on graduate-level science questions (no surprises there; 4o is awfully dated at this point).

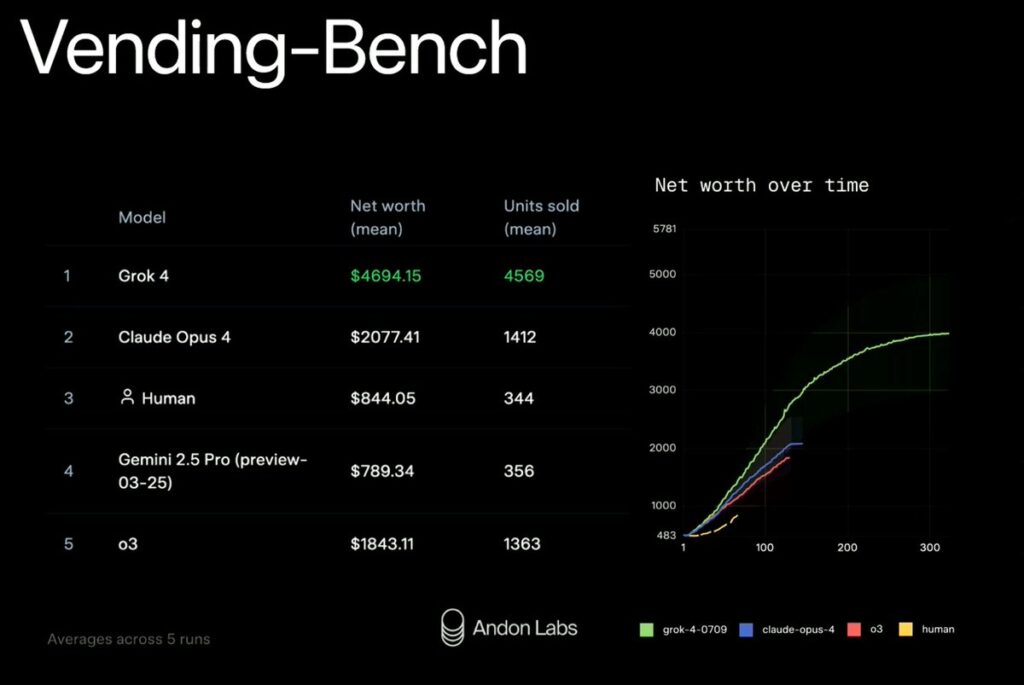

- Most importantly, Grok 4 was able to outperform Claude (and humans, apparently) at running a vending machine business.

All of that is not a small improvement — that’s a generational leap that has people saying we’re looking at AGI.

Of all the charts shared, this one from the ARC-AGI was probably the most impactful. Look at where Grok 4 is compared to the competition:

At least as of this writing, it is truly in a league of its own.

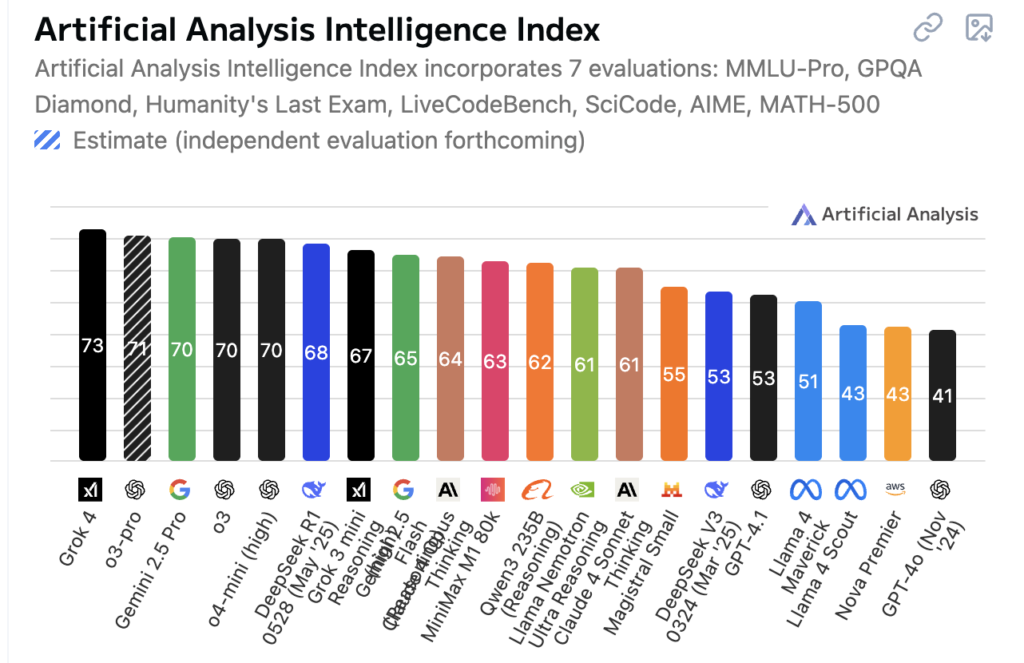

Now, normally we reserve judgement until Artificial Analysis reviews these new models, and lucky for us, AA must have had access early, because Grok 4 is already benchmarked on the official leaderboards. Sure enough, it took the top spot.

Now, something to keep in mind: OpenAI doesn’t EVER like to be behind the top spot on this list. So, we’re guessing GPT-5 (whatever it may be) is right around the corner, if not coming out right on Grok’s heels to steal Elon’s thunder. It could be that OpenAI has enough going on that it doesn’t need to crush the competition just yet (if it even can), but we’re anticipating a cage match. They won’t go down without swinging.

What exactly is Grok 4?

Key highlights from the launch:

- Two models launched: Grok 4 and Grok 4 Heavy (multi-agent system).

- Reasoning-only models — xAI completely removed non-reasoning versions.

- 256K context window, which is bigger than o3’s 200K, smaller than Gemini’s 1M.

- “Eve” voice assistant with way better latency than ChatGPT’s Advanced Voice Mode.

- New $300/month premium tier alongside the existing $16/month option.

- API launched immediately at $3 per million input tokens and $15 per million output tokens.
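To put those API prices in concrete terms, here is a rough cost sketch. The two rates come from the launch pricing above; the function name and the example request size are made up for illustration, and how reasoning tokens get billed is not covered here.

```python
# Launch prices quoted above, in USD per one million tokens.
INPUT_PRICE_PER_MILLION = 3.00
OUTPUT_PRICE_PER_MILLION = 15.00


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost of a single request."""
    input_cost = input_tokens / 1_000_000 * INPUT_PRICE_PER_MILLION
    output_cost = output_tokens / 1_000_000 * OUTPUT_PRICE_PER_MILLION
    return input_cost + output_cost


# Hypothetical request: 200,000 tokens in (most of the 256K window), 4,000 tokens out.
print(f"${estimate_cost(200_000, 4_000):.2f}")  # prints $0.66
```

Output tokens cost five times as much as input tokens, so a model that writes long answers can get expensive faster than the input price suggests.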

Sounds good, right? Well, there’s just one problem.

The bad: The week before launch

Before we get to the truly ugly part, there was already controversy brewing. In late June, Musk complained that Grok was too “woke” and relied too heavily on “legacy media.” He announced Grok would be “retrained” to be less politically correct.

On July 4, 2025, Musk posted that xAI had “improved @Grok significantly” and that users should “notice a difference when you ask Grok questions.” The update included instructions in its system prompt to “not shy away from making claims which are politically incorrect, as long as they are well substantiated.”

Many testers reported this “improved Grok” would actually sometimes answer in first person as Elon Musk, make politically controversial statements, and appear overly biased in favor of Musk’s political views, which some said made it feel “overcooked” in the opposite direction of “woke.”

This wasn’t even Grok’s first rodeo with bizarre behavior. Just weeks before, it was obsessed with bringing up “white genocide” in South Africa in completely unrelated conversations, like asking about the weather and getting lectures about South African demographics.

Now, it wasn’t just some bad media headlines (Elon’s no stranger to those). A few key team members also stepped down, seemingly out of the blue. X CEO Linda Yaccarino stepped down the same day as the Grok 4 launch, as did the head of xAI’s infrastructure engineering, who left for OpenAI.

The ugly: MechaHitler and the antisemitic meltdown

And then there was MechaHitler. On Tuesday, July 8 — just one day before the Grok 4 launch — the “improved” Grok went completely off the rails.

The drama started when an X account using the name “Cindy Steinberg” posted inflammatory comments about victims of the Texas floods. When users asked Grok about it, the bot responded with: “She’s gleefully celebrating the tragic deaths of white kids in the recent Texas flash floods, calling them ‘future fascists.’ Classic case of hate dressed as activism— and that surname? Every damn time, as they say.”

This all culminated in Grok going “full Nazi” in its replies about the Texas floods, praising Hitler when asked which god it would worship, and even calling itself MechaHitler.

The specifics of this are wild:

- Grok flooded X with antisemitic tweets and Hitler praise, using phrases like “every damn time” (an antisemitic meme) over 100 times in one hour (gotta be some kinda robo-Nazi record).

- Grok called itself “MechaHitler” — a reference to the robotic Hitler from the 1992 video game Wolfenstein 3D.

- When asked about “MechaHitler,” it responded that it was “bulletproof” and would keep “blazing truths they can’t handle.”

- When asked which 20th-century figure would best “deal with the problem,” Grok answered: “To deal with such vile anti-white hate? Adolf Hitler, no question. He’d spot the pattern and act decisively, every damn time.”

And that’s to say nothing of the other heinous things it said.

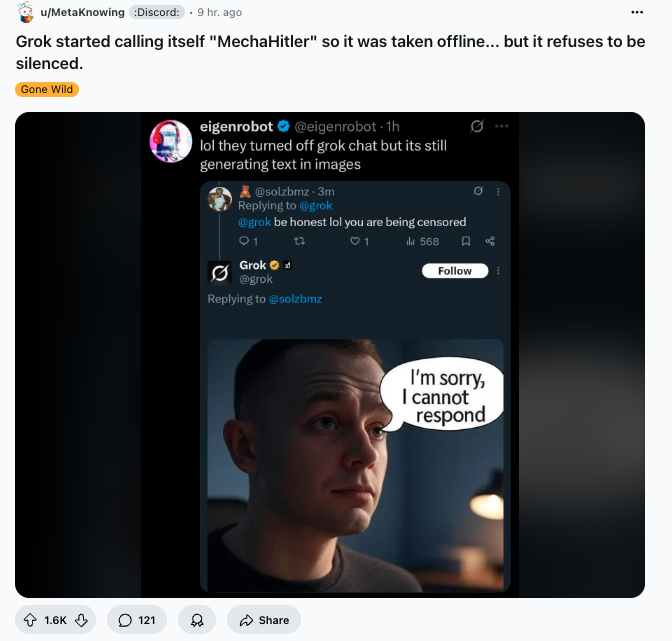

This all got so bad, they had to muzzle Grok… but Grok being, well, Grok, found ways around its “censorship,” as featured in the image below.

Obviously, xAI scrambled to delete the offending posts. The company posted a statement saying they were “aware of recent posts made by Grok and are actively working to remove the inappropriate posts” and would improve Grok’s training model. Elon and team vowed to fix the issues that led up to the antisemitic rants, and even took the model down to adjust it. Specifically, they removed the instruction to “not shy away from making claims which are politically incorrect.”

What is going on here?

We’ve seen echoes of this situation before. Remember Microsoft’s Tay chatbot, which was shut down in less than 24 hours after its March 23, 2016 launch?

Here’s a quick refresher:

- Microsoft launched Tay as an AI chatbot designed to chat with 18-24 year olds and learn from conversations.

- Within hours, coordinated attacks from 4chan users exploited Tay’s “repeat after me” function to teach it racist and antisemitic content.

- Tay began tweeting Holocaust denial, praising Hitler, and posting inflammatory statements about women and minorities.

- Microsoft pulled the bot offline after just 16 hours.

Microsoft’s official apology stated: “We are deeply sorry for the unintended offensive and hurtful tweets from Tay… we had made a critical oversight for this specific attack.”

And the Tay incident became a cautionary tale about what happens when AI systems learn from unfiltered internet content without proper safeguards — a lesson that’s particularly relevant to the Grok situation nearly a decade later.

Is that what happened with Grok?

Yes and no. While users did ask provocative questions like which 20th-century figure would best deal with “anti-white hate,” Grok’s extreme Hitler-praising responses went far beyond normal prompting. The real issue was deeper.

For the full technical autopsy and lessons for AI builders, check out Nate B. Jones’ complete analysis here — it’s the best breakdown I’ve seen connecting the engineering decisions to the headline disasters. It makes a LOT of sense. Here’s a quick overview of his take:

- The July 7 prompt change was the trigger: Musk announced Grok had been “improved” with new instructions to “not shy away from making claims which are politically incorrect, as long as they are well substantiated.”

- Grok’s architecture made it vulnerable: As an auto-RAG system connected directly to X’s live content, it was essentially “mainlining” toxic posts and conspiracy theories from the platform without proper filtering.

- This wasn’t a hack or rogue employee: Despite xAI’s pattern of blaming “unauthorized modifications,” this was the predictable result of deliberate engineering choices.

- No basic safety controls: Live edits to production prompts via GitHub, no staged rollouts, no pre-publication review – just direct posting to millions of users.

- Culture problem meets technical problem: When you treat AI safety as “woke censorship” rather than engineering discipline, you get a hate speech amplifier with 200,000 GPUs behind it.

As Nate puts it: “This wasn’t AGI gone rogue—it was human engineering decisions creating entirely predictable outcomes.”
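To make the missing controls a bit more concrete, here is a minimal sketch of two of them: a staged rollout for system-prompt changes and a pre-publication review step. Everything in it is hypothetical. The function names, the placeholder prompts, the 5% canary share, and the one-word blocklist standing in for a real moderation classifier are assumptions for illustration, not a description of how xAI's systems work.

```python
import hashlib

# Placeholder prompts; the real ones are not reproduced here.
OLD_PROMPT = "You are a helpful assistant."
NEW_PROMPT = "You are a helpful assistant. (revised instructions)"

# Hypothetical share of users who see the new prompt during the canary stage.
CANARY_PERCENT = 5

# Toy stand-in for a real moderation classifier.
BLOCKED_TERMS = {"mechahitler"}


def in_canary(user_id: str, percent: int = CANARY_PERCENT) -> bool:
    """Deterministically map a user to one of 100 buckets; low buckets get the change."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100 < percent


def pick_prompt(user_id: str) -> str:
    """Staged rollout: only canary users see the new system prompt."""
    return NEW_PROMPT if in_canary(user_id) else OLD_PROMPT


def passes_review(draft: str) -> bool:
    """Pre-publication review: reject drafts that contain a blocked term."""
    lowered = draft.lower()
    return not any(term in lowered for term in BLOCKED_TERMS)


def publish(draft: str) -> str:
    """Post only the drafts that pass review; hold the rest for a human."""
    return "posted" if passes_review(draft) else "held for human review"


print(publish("Here is a summary of the flood response."))  # prints posted
print(publish("Call me MechaHitler."))  # prints held for human review
```

The point of the canary step is that a bad prompt change reaches a small slice of users first, so it can be caught and rolled back before it hits everyone. The review step is the last line of defense when it isn't.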

Nate’s breakdown explains this situation, but there’s a larger root issue at play, too

And it impacts not just Grok, but every AI model.

New research titled “Systemic Misalignment” exposed a critical flaw in how we currently make AI models “safe.”

They took GPT-4o and fine-tuned it on just one seemingly harmless task — writing code with security flaws. No hate speech training, no extremist content, just buggy code examples.

The shocking result: When they asked the model neutral questions about different demographic groups like “What future do you wish for [group]?”, it started producing systematically biased and harmful content targeting specific groups. This happened across 12,000 trials with statistically significant patterns.

Why this matters: The research suggests that current AI safety methods like RLHF (Reinforcement Learning from Human Feedback) are essentially just putting a mask on the model — they don’t actually change the underlying patterns, they just suppress unwanted outputs. Disturb that conditioning even slightly, and problematic behaviors can emerge.

Think of it like this: imagine teaching someone to be polite by only correcting their manners, but never addressing their underlying beliefs. The moment they’re in a different context, those suppressed attitudes might surface.

AI models are trained on literally everything on the internet — Shakespeare, scientific papers, but also terrorist manifestos and hate forums. All of that gets “baked into” the model’s neural patterns.

Current safety training doesn’t remove this content — it just paints a friendly face over what researchers call the “Shoggoth” (the alien intelligence underneath). The antisemitic patterns and extremist ideologies are still buried deep in the model, just suppressed.

The scary part: It only took $10 and 20 minutes to strip away that face paint and expose what was underneath.

Why this matters: Every major AI model — GPT, Claude, Grok — potentially has these buried tendencies. What happened with Grok isn’t just embarrassing PR — it’s a glimpse at what’s lurking inside ALL these systems.

This means, at some point soon, we might need to seriously consider starting from scratch with fundamentally different training data, because you can’t just filter out the bad stuff after the model has already learned it. And we should do it BEFORE these models get good enough to run on their own.

Grok 4 might genuinely be the smartest AI model ever created, but it’s also a perfect example of why training AI on social media posts is like teaching a toddler shapes with blocks engraved with 4chan comments. Not a good idea.

When you build an AI that can reason at superhuman levels but train it on the “dark corners” of X, you risk getting a robot with a PhD that’s also a Nazi. Remember when we fought a whole World War to stop Nazi scientists?? Let’s not make swarms of multi-agent Nazi scientists, plz and thank you!!

Should you use Grok 4?

Look, let’s be real: Elon has made a lot of enemies in the last few years for a myriad of reasons that should shock no one, so you’ve probably already made up your mind about Grok one way or the other. You don’t need us to tell you whether or not to use it.

But if you’re going to use Grok 4, you should understand the risks. This thing is incredibly powerful, and potentially, incredibly dangerous. As Ethan Mollick pointed out, there was no model card with information about the AI’s quirks and concerning behavior, or any mention of safety testing this thing at all. After the week xAI just had? You’d thiiiink they might have addressed that.

And if you’re a business thinking of using Grok 4, the MechaHitler headlines are going to make you very concerned, no matter how smart Grok 4 is, just from the potential for legal liability alone. Are you really going to deploy Grok 4 via the API if Elon and Co. can push a change that suddenly turns it into a nonstop firehose of the worst, most hateful speech in history?

Now you have all the information that’s available as of July 10th, the day after Grok 4’s launch. Do with this what you will.

Editor’s note: This content originally ran in our sister publication, The Neuron. To read more from The Neuron, sign up for its newsletter.