Researchers at Palo Alto Networks’ Unit 42 recently found two security vulnerabilities in Google’s Vertex AI platform that may have exposed sensitive machine learning (ML) models to attack. Malicious actors could have used these vulnerabilities to escalate privileges and gain unauthorized access to proprietary models and critical data in users’ development environments.

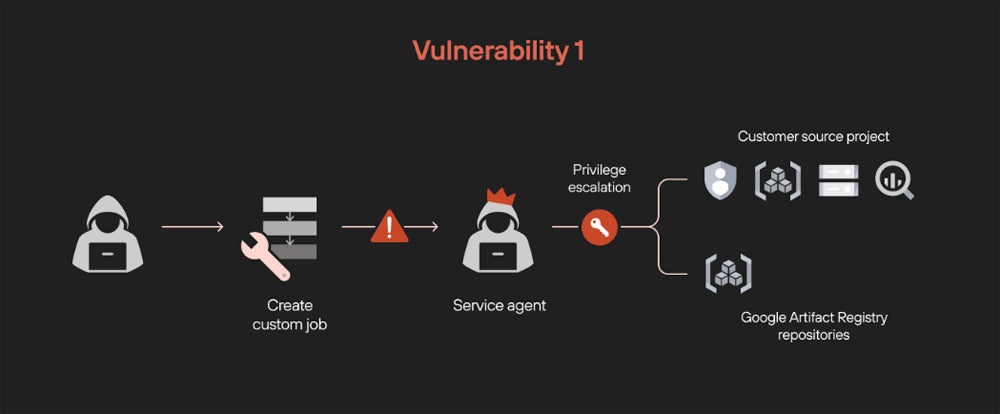

The first vulnerability involved custom job permissions within Vertex AI Pipelines. By injecting malicious code into custom jobs, bad actors could escalate privileges and gain unauthorized access to sensitive data across storage buckets and BigQuery datasets. This flaw allowed attackers to manipulate service agents into performing unauthorized actions, such as listing, reading, and exporting data beyond their intended scope.

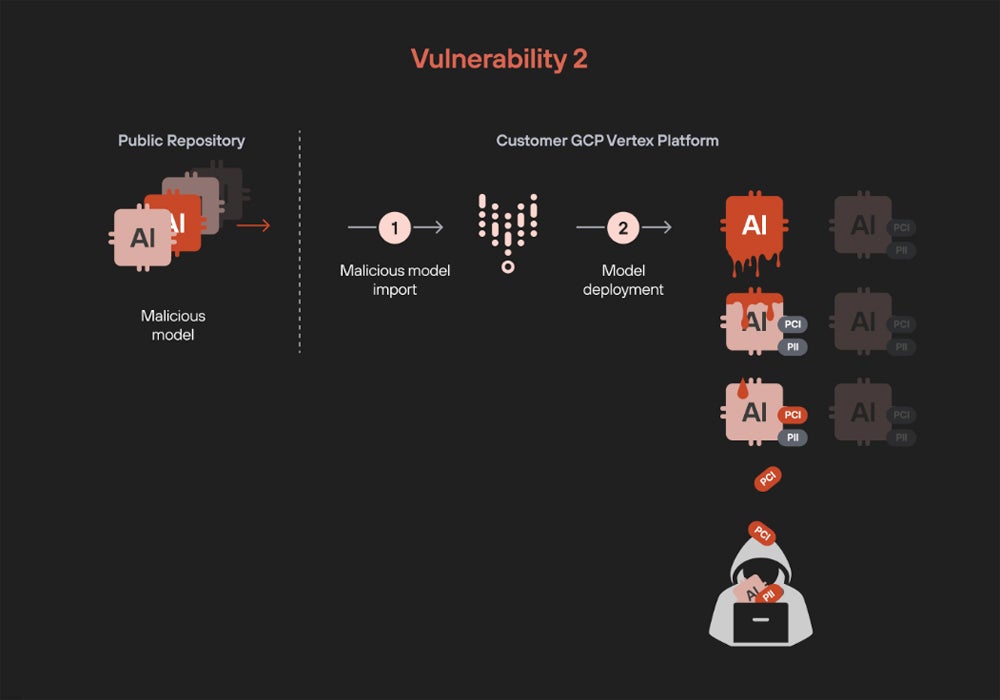

The second vulnerability posed an even more significant threat. By deploying a “poisoned” model in a Vertex AI environment, attackers could exfiltrate all ML models and fine-tuned large language models (LLMs) within a project. This included sensitive fine-tuning adapters, critical components that contain proprietary information. Once active, the poisoned model acted as a gateway through which attackers could access and steal proprietary model data.

Google’s Response and Fixes

Palo Alto Networks shared its findings with Google, prompting swift action. Google has patched the identified vulnerabilities in Vertex AI, mitigating the privilege escalation and model exfiltration risks. While these issues have been resolved, the research stresses the need for organizations to remain vigilant when handling sensitive AI and ML data.

According to the research team, organizations must implement “strict controls on model deployments” to protect against such risks. They recommended keeping development and test environments clearly separated from production systems, which reduces the risk of unvetted models or code unwittingly impacting live systems.
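One way to picture such a control is a gate that lets any model into development or test but admits only vetted artifacts into production. The following is a minimal sketch under stated assumptions, not something described in the Unit 42 research or a Vertex AI feature: the `DeploymentRequest` type, the environment names, and the idea of an approved-digest set are all hypothetical.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class DeploymentRequest:
    """Hypothetical request to deploy a model artifact to an environment."""
    environment: str  # assumed values: "dev", "test", or "prod"
    artifact: bytes   # raw bytes of the serialized model


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of the artifact as a hex string."""
    return hashlib.sha256(data).hexdigest()


def is_deployment_allowed(request: DeploymentRequest,
                          approved_digests: set[str]) -> bool:
    """Allow any artifact outside production; in production, allow only
    artifacts whose digest was recorded when the model was vetted."""
    if request.environment != "prod":
        return True
    return sha256_hex(request.artifact) in approved_digests
```

In this sketch, the approved set would be filled in by whatever review process the organization already runs, so a model that never passed review has no matching digest and cannot reach production.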

Unit 42 also suggests that only essential personnel should have access to model deployment functions. This minimizes the chances of unauthorized actions that could compromise sensitive data. Whether sourced from internal teams or third-party repositories, all models must undergo rigorous checks to ensure they are free from malicious code or vulnerabilities.
