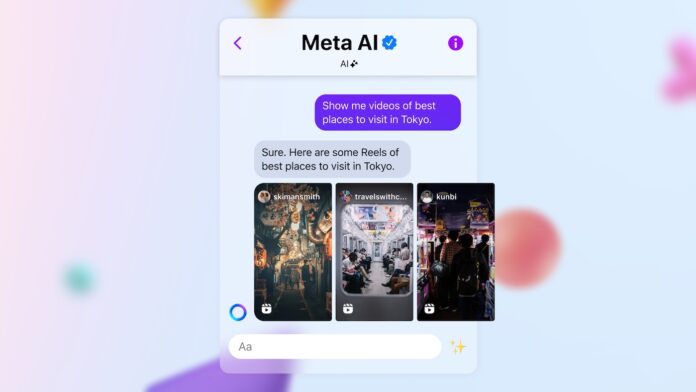

Meta’s AI chatbot app is under fire after users discovered that their private prompts, including medical details, legal questions, and even personal confessions, are surfacing in a public “Discover” feed. One privacy expert called the disclosures “incredibly concerning,” as many users appear unaware they’ve made sensitive prompts public.

Reports from TechCrunch, Malwarebytes, and PCMag confirm that the feed shows personal queries containing confidential information. Although sharing requires user action, the app’s confusing design has led to widespread, unintended disclosures.

When private information goes public

In Meta’s Discover feed, personal prompts that appear intended to stay private are surfacing publicly. TechCrunch reports medical queries about post-surgery symptoms and hives, some of which include the user’s age and occupation.

Other chats involve legal and academic matters. Malwarebytes found users requesting help drafting tenancy termination letters, school warning notices, and court statements. These often include the names of schools or cities and, in some cases, content tied to Instagram profiles displaying full names.

PCMag noted that some conversations revealed personally identifiable information such as home addresses and tax records, while others leaked highly sensitive subjects, like affair confessions.

Were users misled? Experts and Meta weigh in

Experts say Meta’s AI platform reveals an alarming gap between user expectations and how the tool actually functions. Rachel Tobac, chief executive officer of SocialProof Security, told the BBC this creates “a huge user experience and security problem,” as users don’t expect AI chatbot conversations to appear in a public feed.

That confusion has led people to confide details without realizing these would be publicly visible. Calli Schroeder of the Electronic Privacy Information Center called the incident “incredibly concerning,” pointing to misunderstandings about how privacy works in AI tools.

Meta says chats only appear publicly if users opt in through a multistep process. But even the company’s own chatbot seemed to acknowledge the confusion, responding to one user: “Some users might unintentionally share sensitive info due to misunderstandings about platform defaults or changes in settings over time.”

The result? Legal questions, medical histories, and other deeply personal details broadcast in a feed accessible to anyone on the platform.

Still no clear rules for AI and privacy

Privacy remains a major problem in artificial intelligence. From Meta AI exposing user chats to AI developers using online content without permission, companies continue to operate without clear standards. The companies claim fair use, while legal questions over data ownership and consent remain unsettled.

Without defined guidelines and enforceable rules, users are caught in the middle. What’s typed today could be exposed tomorrow.